When thinking about language models, my mind often wanders back to the idea that after training a model, we can prune a considerable share of its weights (e.g. 30-40%) without noticeably hurting its performance.[1] This is in strong contrast to the mental model I had when I started learning ML - in my mind the massive training runs that go into these systems would saturate all the "bandwidth" the model has to offer.
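To make "pruning" concrete, here is a minimal sketch of one common variant, magnitude pruning, where the weights with the smallest absolute values are set to zero. This is an illustration under assumptions: the `magnitude_prune` helper and the random list standing in for a layer are made up for this example, and the sketch says nothing about how accuracy is affected in a real model.

```python
import random

def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = round(sparsity * len(weights))  # how many weights to drop
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

random.seed(0)
# Hypothetical stand-in for the weights of a single layer.
weights = [random.gauss(0.0, 1.0) for _ in range(1000)]
pruned = magnitude_prune(weights, sparsity=0.35)

zero_fraction = sum(w == 0.0 for w in pruned) / len(pruned)
kept_magnitude = sum(abs(w) for w in pruned) / sum(abs(w) for w in weights)
print(f"zeroed: {zero_fraction:.0%}, total magnitude kept: {kept_magnitude:.0%}")
```

The point of the toy is only that removing a third of the weights by count removes much less than a third of the total weight magnitude, because the dropped weights are the small ones.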

Part of the reason might be[2] that the extra capacity protects us against bad initializations. In transformer models it seems that, within a single transformer block, we sometimes get attention heads that replicate each other's function or don't do anything meaningful. The theory here is that a bad initialization might create a situation where the attention head cannot converge to anything useful. So in practice it's better to initialize 12 attention heads and get 5-10 of them working, even with some overlap, than to initialize just a few and risk that many (or all) of them do not work.
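The "more heads as insurance" argument can be put into a back-of-the-envelope calculation. The sketch below assumes, purely for illustration, that each head independently ends up useful with some fixed probability; both the independence and the value `P_USEFUL = 0.6` are made-up assumptions, not measurements from any model.

```python
from math import comb

def prob_at_least(n_heads, k_useful, p_useful):
    """P(at least k_useful of n_heads end up useful), heads assumed independent."""
    return sum(
        comb(n_heads, j) * p_useful**j * (1 - p_useful) ** (n_heads - j)
        for j in range(k_useful, n_heads + 1)
    )

P_USEFUL = 0.6  # hypothetical per-head chance of a "good" initialization

for n in (3, 12):
    p_none = (1 - P_USEFUL) ** n          # every head fails
    p_three = prob_at_least(n, 3, P_USEFUL)  # at least three heads work
    print(f"{n:2d} heads: P(none useful) = {p_none:.6f}, "
          f"P(at least 3 useful) = {p_three:.3f}")
```

Under these assumptions, going from a few heads to a dozen makes a total failure far less likely, which is the intuition behind over-provisioning heads and accepting that some will be redundant.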

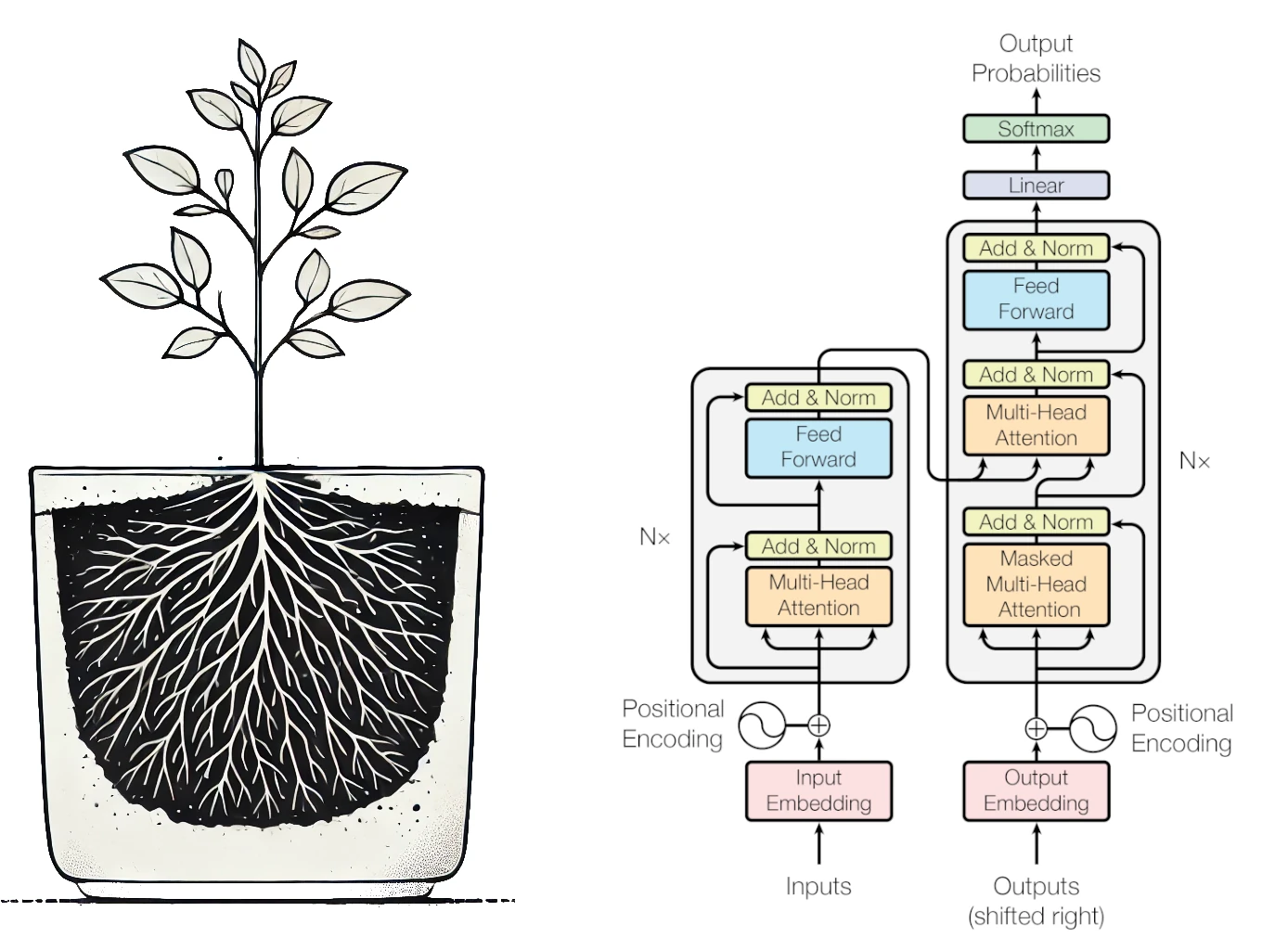

In any case, I've recently been thinking of this as a potted plant. When I plant my indoor plant in a pot, I don't actually think that the roots of the plant will fill the whole pot volume. (Though sometimes they do.[3]) Instead, what I feel happens is that the pot simply provides a place for the plant to grow a root system that fits the situation. Maybe this is what happens with transformers as well? There is little reason to assume that the structure of (human) language is well approximated by the BERT transformer architecture. But perhaps the architecture is big and fertile enough to provide a place for BERT to grow the structure it needs?

1. The "30-40%" number is from Gordon, Duh and Andrews. See also the BERTology paper by Rogers, Kovaleva and Rumshisky for a wider discussion.

2. Prince mentions this on page 235, in the Notes section of Chapter 12 under Types of attention.

3. My favorite Wikipedia page, the list of fractals by Hausdorff dimension, does not include plant roots, but it does list the Lichtenberg figure, which looks a bit like a root system to me. That one has an approximate Hausdorff dimension of 2.5, so it is not volume filling but much more "dimensional" than a single 1D root.