Going through the list more carefully, it turned out that I had already read a few of these. So, as promised, here are some personal comments on them.

Karpathy - The unreasonable effectiveness of RNNs

I vaguely remember reading this around 2020, when I was studying the fundamentals of ML. It must have been listed as extra reading in Andrew Ng's Coursera course on AI. The blog post is from 2015, just two years before the Attention is all you need paper, but the attention mechanism was already in use [1], and Karpathy mentions attention as a particularly promising research avenue. I didn't dive into the code provided, but in general I think this is a very nice descriptive introduction to how RNNs work. I especially liked the comparison that RNNs are to vanilla NNs what programs are to functions.
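The programs-versus-functions comparison can be made concrete with a toy sketch. This is not Karpathy's code: the scalar weights and the names `feedforward`, `rnn_step` and `run_rnn` are made up for illustration. The point is only that the feedforward unit maps the same input to the same output every time, while the recurrent one carries state from step to step.

```python
import math

def feedforward(x, w=0.5, b=0.1):
    # A "function": the output depends only on the current input.
    return math.tanh(w * x + b)

def rnn_step(x, h, w_x=0.5, w_h=0.9, b=0.1):
    # One step of a toy scalar RNN: the new hidden state mixes the
    # current input with the previous hidden state.
    return math.tanh(w_x * x + w_h * h + b)

def run_rnn(xs, h0=0.0):
    # A "program": the same step is applied repeatedly, carrying state along.
    h = h0
    states = []
    for x in xs:
        h = rnn_step(x, h)
        states.append(h)
    return states

inputs = [1.0, 0.0, 1.0]
ff_outputs = [feedforward(x) for x in inputs]   # first and last are identical
rnn_states = run_rnn(inputs)                    # first and last differ
```

The first and third inputs are the same, so the feedforward outputs match, but the RNN states do not, because the third step also sees what came before it.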

Olah - Understanding LSTM Networks

Another piece which I think I read back around 2020. While rereading the post I felt that the author was explaining exactly my mental model of LSTMs, which most likely means that my mental model was built on this text. This is a really well-written piece from both a pedagogical and a graphic design point of view. I recommend reading it simply as a really good example of how to explain technical topics.

Like Karpathy's The unreasonable effectiveness of recurrent neural networks, this text (also from 2015) already mentions the soon-to-come revolution:

LSTMs were a big step in what we can accomplish with RNNs. It’s natural to wonder: is there another big step? A common opinion among researchers is: “Yes! There is a next step and it’s attention!” The idea is to let every step of an RNN pick information to look at from some larger collection of information.

Reading both Karpathy's post and this one gives some nice context for the rise of transformers.
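The idea in the quote, letting each step pick what to look at from a larger collection, can be sketched in a few lines. This is a minimal illustration, not the formulation from either blog post: dot-product scoring is assumed here as one common choice, and the names `attend` and `memory` are hypothetical.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    # Score each item in the collection against the query (dot product),
    # turn the scores into weights, and return the weighted average.
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context

# A toy "larger collection of information": three 2-d vectors.
memory = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = attend([2.0, 0.0], memory, memory)
```

With this query, the first and third items get most of the weight, and the second is mostly ignored. Every item still contributes a little, which is what makes the selection soft.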

Vaswani et al - Attention is all you need

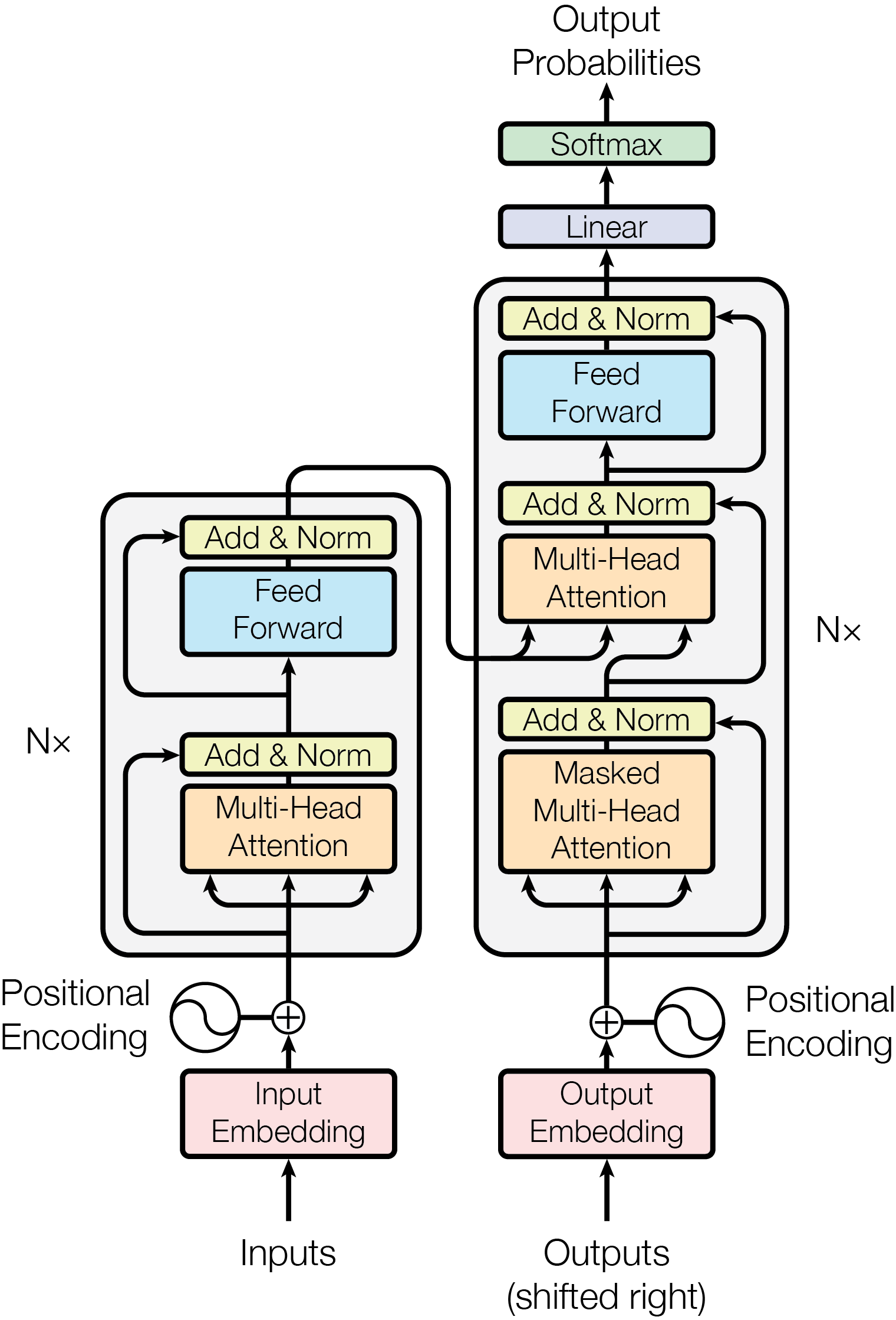

The transformer paper: the one that gave us the picture above, which has become pretty much the universal symbol for a transformer. (Most people will recognize its silhouette.)

I've read this very thoroughly a few times. I had skimmed it earlier, but I really studied it while writing my second master's thesis on the embedding spaces in the transformer-based BERT model. Attention is all you need is somewhat technical and is not written for a general audience. For people unfamiliar or less familiar with NLP, I'd suggest starting with the Neural Networks series by 3Blue1Brown.

Aaronson - First law of complexodynamics

I'm pretty sure I read this around 2019, when I lectured an Information Theory course at JYU. At least it seemed very familiar when rereading it now. In my lecture notes (in Finnish), which get pretty thin in the last week, I mention Kolmogorov Sophistication (also discussed here) and cite Aaronson, Carroll and Ouellette 2014. I'm guessing that this is the same Ouellette who is mentioned at the end of Aaronson's post.

Anyway, the main idea/problem discussed here is very cool. I remember the coffee cup image vividly, and I have at times come back to ponder the main concept. My information theory basics are a bit rusty, and I'm having some trouble really grasping the technical definition of Kolmogorov Sophistication. I do remember that I understood it back in 2019, so I might refresh my memory with the arXiv paper by Aaronson and the gang.

-

[1] For a nice brief history of the attention mechanism, I usually recommend Tunstall et al. It is also good for more advanced NLP studies.